Vibe Coding: Is it a Fad or the Future of Coding?

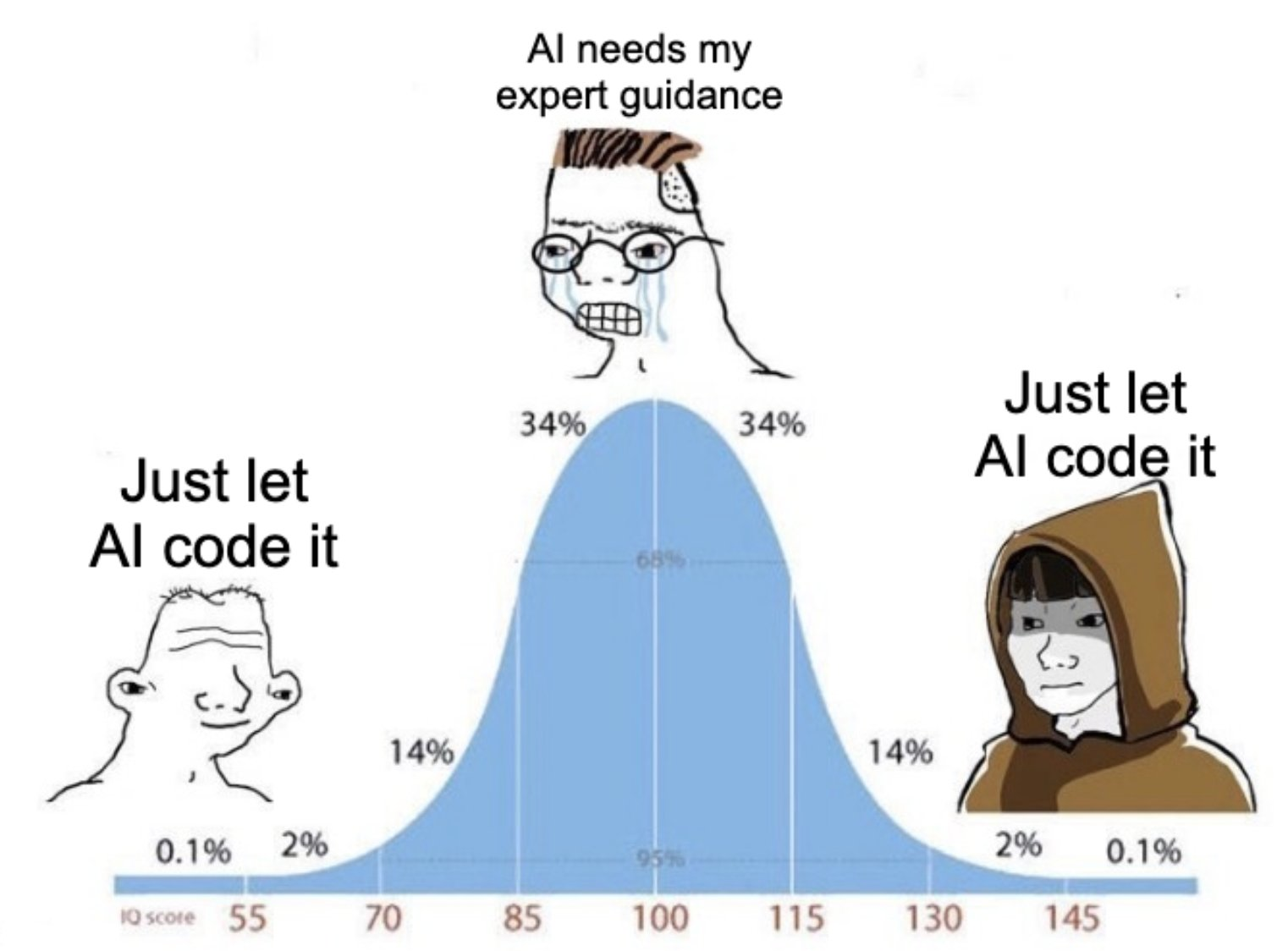

Coined by Andrej Karpathy in early 2025, “vibe coding” describes a hands-off, LLM-driven approach to software development where the programmer acts more like a product director than a traditional coder. Instead of writing detailed logic, developers guide AI with broad prompts, accept most of its output, and iterate based on results—prioritizing speed and experimentation over structure or precision. Proponents see it as democratizing coding and accelerating prototyping. Critics argue that it’s reckless, potentially insecure, and undermines engineering rigor. So is it a paradigm shift, or just another tech hype cycle?

Check out 🔥🛸 Fireship’s humorous take on vibe coding.

‘Fireship has really taken that whole “during a gold rush, sell shovels” concept to heart.’ (credit to Dean Fletcher).

Vibe Coding Meaning

“Vibe coding” is an intriguing natural‐language experiment in AI‐driven (software) development, but it’s far from a silver bullet. Here’s how I see it:

1. Speed vs. Substance

- Pros: You can spin up prototypes astonishingly fast. Instead of wrestling with syntax or boilerplate, you hand off the grunt work to the LLM and pivot immediately when something doesn’t fit your high‐level or “big-picture” vision.

- Cons: You’re essentially trading deep understanding for velocity. When you don’t know exactly how the code works, debugging and extending it become a game of guesswork—especially when subtle business rules or edge cases crop up.

2. Lowering the Barrier, Raising the Risk

- Pros: Novices can build “real” apps by feel, without years of training in software engineering fundamentals, which democratizes software creation.

- Cons: Security, performance, and maintainability often fall through the cracks. An AI will happily stitch together insecure patterns or inefficient queries unless you specifically call them out. Who’s accountable when those vulnerabilities go live?

3. The Feedback Loop

- Pros: Iterative “tell me to improve this” cycles can refine UI behaviors, generate test scaffolding, or massage data transformations more fluidly than traditional pair programming.

- Cons: Without rigorous tests and reviews, you risk accumulating inferior “black‐box” and “buggy” code. Over time, the mental model of your system drifts further away from reality, making refactors a nightmare.

4. Skill Atrophy vs. Skill Growth

- Pros: By offloading repetitive chores, you focus on architecture, UX, and user flows—skills that arguably matter more than memorizing every language idiosyncrasy.

- Cons: If you’re not careful, you stop learning the underlying abstractions. Next thing you know, you can’t write a loop or trace an algorithm yourself—you just know how to “prompt” the AI.

Bottom line: I share the ambivalence of many developers. Vibe coding can supercharge creative prototyping, but it shouldn’t replace disciplined engineering. If you try it:

- Lock down tests and security audits. Use practices like Test-Driven Development (TDD), and run small, encapsulated feature tests in the style of Behavior-Driven Development (BDD) before pushing code. That way you can confirm the code is reliable, stable, and behaves as expected.

- Keep your own code checkpoints—understand every chunk, function, and code block before you ship.

- Use it as an accelerator, not a crutch: learn from what the AI suggests, rather than mindlessly accepting it.

Embrace the vibe—but don’t forget that your strongest asset is your own critical eye.

Skills Still Matter When You’re Vibe Coding

I feel like it’s a tool that assists you, but you still need the underlying skill set—or at least basic knowledge—to use LLM-generated code in a production or MVP setting. As the project increases in complexity and features, this becomes even more critical.

The more complex the needs, features, and codebase become, the more context you have to keep feeding the LLM, unless you keep features compartmentalized and isolated (which is where Docker and concepts like OOP come in). But if you don’t really know how these underlying technologies work or interact, the whole “house of cards” can easily come crashing down.

I liken it to sanding and other manual labor like body work. Sure, a powered tool may help speed up the process, but if you don’t know how to properly apply pressure, hold the tool at the right angle, for the right amount of time, or don’t know what grit sandpaper to use, then you’ll end up causing damage. You need to understand the basics of sanding, with a piece of sandpaper in your hand, before you can graduate to using power tools.

Vibe coding is only as solid as the foundation you build under it:

Fundamentals First

- Just as you wouldn’t pick up a pneumatic sander before learning at least the basics of hand sanding, you can’t delegate architecture, dependency management, or state isolation to an LLM if you barely understand those concepts yourself.

- Without a mental model of Docker networking, OOP design, module boundaries, and data flow, your prompts become scattershot. You end up firefighting mysterious bugs instead of steering the ship.

Context Is Currency

- LLMs “forget” beyond their context window. As your MVP grows, you must re‑supply module APIs, type definitions, service contracts, environment variables… or watch the AI begin to “hallucinate” broken code.

- Effective compartmentalization (via Docker, micro‑frontends, clear interfaces) mitigates that—but only if you’ve designed those boundaries yourself.
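To make the re-supply problem concrete, here is a minimal sketch of packing the most important interface snippets into a fixed budget before each prompt. The `ContextSnippet` shape, the `priority` field, and the character budget (a crude stand-in for tokens) are all assumptions for illustration, not how any particular tool works.

```typescript
// Hypothetical shape: one piece of project context you might re-supply to an LLM.
interface ContextSnippet {
  label: string;    // e.g. "User type"
  text: string;     // the type definition, contract, or env list itself
  priority: number; // higher = more important to keep
}

// Greedily pack the highest-priority snippets into a character budget.
// Characters are only a rough proxy for tokens; real tokenizers differ.
function packContext(snippets: ContextSnippet[], maxChars: number): string {
  const sorted = snippets.slice().sort((a, b) => b.priority - a.priority);
  const kept: string[] = [];
  let used = 0;
  for (const s of sorted) {
    const block = `// ${s.label}\n${s.text}\n`;
    if (used + block.length > maxChars) continue; // skip what doesn't fit
    kept.push(block);
    used += block.length;
  }
  return kept.join("");
}

const packed = packContext(
  [
    { label: "env vars", text: "DATABASE_URL, PORT", priority: 1 },
    { label: "User type", text: "type User = { id: number; name: string }", priority: 3 },
    { label: "long changelog", text: new Array(501).join("x"), priority: 2 }, // 500 chars
  ],
  120,
);
```

With a 120-character budget, the oversized changelog is dropped while the two small snippets survive. The point is that you decide what the model must never lose, which only works if you designed those boundaries yourself.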

Tool, Not Replacement

- Think of AI as a “turbocharger” for productivity, and not the driving “engine” for it. You still need to know when to shift gears, how much fuel (tests, linting, CI) to feed it, and when to pull over and inspect under the hood.

- Use AI to scaffold boilerplate, generate tests, or propose refactors—but validate every line for security, performance, and maintainability.

Escalating Complexity

- Early prototypes thrive on “give me CRUD for X.” But once you add feature flags, version migrations, multi‑tenant auth, or real‑time updates, the safety net vanishes unless you, not the AI, own the design.

- You’ll hit diminishing returns the moment you can’t mentally trace the call stack or data flow without retracing fifty AI‑generated functions.

Takeaway: Vibe coding accelerates you up a gentle slope, but when the terrain gets rocky, you need climbing gear. Invest in core skills (architecture, testing, security reviews, modular design) first. Then let AI be your fast‑moving assistant, not your blindfolded “co‑pilot” (no offense to Microsoft or GitHub 🤓).

Outdated Training or “Knowledge Gap” in LLMs

It’s also worth keeping in mind that LLMs are often trained on outdated data and take a long time to “adjust” to deprecations and renames (like Angular Universal now being called Angular SSR, or the top-level ‘version:’ key in Docker Compose YAML files no longer being used), to less common languages like Zig, or even to basic, well-documented feature updates in languages and libraries. You’re often left trying to get the LLM up to speed with recent changes. If you’re unaware of the latest documentation yourself, you’ll be left scratching your head, wondering why the code doesn’t run.

I’ve yet to see an LLM write Zig code that can compile and actually do more than a simple "hello, world!" app.

The ‘Achilles’ Heel’ of Vibe Coding

LLMs’ stale knowledge base is a real Achilles’ heel:

Training Cutoffs = Knowledge Gaps

- Most models are frozen on code and docs from months (or years) ago. They’ll cheerfully spit out deprecated patterns, like `version:` in Docker Compose, because that’s what they “know”, and not what’s best practice today.

- You end up in a bind: you’re either constantly correcting the AI (“No, use Angular SSR, not Universal”) or debugging cryptic errors when it leans on obsolete APIs.

Onboarding the LLM vs. Onboarding Yourself

- If you’re unfamiliar with recent changes, your prompts lack the specificity to steer the LLM toward modern syntax. You’ll spend more time teaching the AI than writing or fixing code yourself.

- Worse, you can’t tell when it’s hallucinating new “features”, or sequences of operations, that don’t exist.

Language-Specific Blind Spots

- Emerging or niche languages—Zig, Crystal, even newer Rust features—will barely register in the model. Getting anything beyond boilerplate “Hello, World!” is asking for trouble.

- For those ecosystems, your only recourse is manual reference to up‑to‑date docs or community forums.

Workarounds and Mitigations

- Retrieval-Augmented Prompts: Feed the LLM live docs or changelogs alongside your prompt so it has access to the latest specs.

- CI Gates & Linters: Automate checks for deprecated syntax, security issues, and style guide violations. Catch the AI’s misfires before they reach production.

- Pair with Human Review: Reserve the AI for scaffolding and brainstorming, but mandate that every snippet passes through a knowledgeable engineer’s hands.
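As a rough idea of what a “deprecated syntax” gate could look like, here is a small sketch that scans file contents for the two stale patterns mentioned in this article. The rule list, the `DeprecationRule` shape, and the function name are assumptions for illustration; a real setup would more likely lean on an existing linter.

```typescript
// Each rule pairs a regex with a human-readable hint.
// The two rules mirror this article's examples; extend the list for your stack.
interface DeprecationRule {
  id: string;
  pattern: RegExp;
  hint: string;
}

const deprecationRules: DeprecationRule[] = [
  {
    id: "compose-version",
    pattern: /^version\s*:/m, // unindented, i.e. top-level, `version:` key
    hint: "Top-level `version:` is obsolete in Docker Compose; remove it.",
  },
  {
    id: "angular-universal",
    pattern: /@nguniversal\//, // legacy Angular Universal package scope
    hint: "Angular Universal is now Angular SSR (`@angular/ssr`).",
  },
];

// Return one message for every rule that matches the given file contents.
function findDeprecations(source: string): string[] {
  return deprecationRules
    .filter((rule) => rule.pattern.test(source))
    .map((rule) => `${rule.id}: ${rule.hint}`);
}

const composeFile = 'version: "3.8"\nservices:\n  web:\n    image: nginx\n';
const findings = findDeprecations(composeFile); // one finding: compose-version
```

In CI, a wrapper script would fail the build whenever `findings` is non-empty, so the AI’s stale habits get caught before a human has to notice them.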

Bottom line: AI feels magical until you hit the bleeding edge. Its usefulness plummets once you depend on the latest features or less-common languages. Treat the LLM as a convenience for well‑trodden paths, not a truth oracle—and always keep the official docs and your own expertise front and center.

Vibe Coding Tools

Here’s how people typically outfit a “vibe coding” workflow—with tools that lean into conversational, iterative code generation and help you manage context as your project grows:

Chat‑based AI Assistants

- GitHub Copilot Chat (in VS Code or JetBrains): full‑blown chat UI on your codebase, can answer questions, generate functions, refactor, and even propose tests.

- ChatGPT (with Code Interpreter / Advanced Data Analysis): lets you upload snippets or small repos and iteratively refine outputs together.

- Amazon CodeWhisperer Chat (since folded into Amazon Q Developer): similar to Copilot Chat but tied into AWS docs and IAM‑aware suggestions.

IDE/Editor “Ghostwriter” Plugins

- Tabnine Compose: multi‑line and whole‑file completions powered by LLMs, plus a chat panel to tweak generated code.

- Replit Ghostwriter: built into Replit’s online IDE—great for quickly spinning up full projects via prompts.

- Kite Pro: AI‑driven completions plus documentation look‑up, though more lightweight than a full chat. (Kite has since been discontinued, so treat it as a historical example.)

Local LLM Runtimes & Retrieval

- Ollama + Code Llama or Mistral on your machine: no cloud lock‑in, can feed local docs, changelogs, and keep the model’s context tight.

- LangChain / PromptFlow: orchestrate retrieval‑augmented pipelines so your LLM always has up‑to‑date API docs, design patterns, or your own code snippets.
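Stripped of any framework, the retrieval-augmented idea boils down to “find the relevant doc excerpts, then paste them ahead of the question.” The sketch below uses naive keyword overlap in place of real embeddings or a vector store; the `DocChunk` shape, the function names, and the two chunk texts are made-up stand-ins, not the LangChain or PromptFlow APIs.

```typescript
// Hypothetical doc chunk: a short excerpt from up-to-date docs or a changelog.
interface DocChunk {
  source: string;
  text: string;
}

// Split text into lowercase alphanumeric words.
function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

// Score a chunk by how many distinct query words it contains.
function overlapScore(query: string, chunk: DocChunk): number {
  const chunkWords = tokenize(chunk.text);
  const queryWords = tokenize(query).filter((w, i, all) => all.indexOf(w) === i);
  return queryWords.filter((w) => chunkWords.indexOf(w) !== -1).length;
}

// Pick the top-k matching chunks and prepend them to the user's question.
function buildAugmentedPrompt(question: string, chunks: DocChunk[], k: number): string {
  const top = chunks
    .map((chunk) => ({ chunk, score: overlapScore(question, chunk) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((entry) => `[${entry.chunk.source}]\n${entry.chunk.text}`);
  return `Reference material:\n${top.join("\n\n")}\n\nQuestion: ${question}`;
}

const ragPrompt = buildAugmentedPrompt(
  "How do I add SSR to an Angular app?",
  [
    { source: "angular-notes", text: "Angular SSR replaces Angular Universal; add it with ng add @angular/ssr." },
    { source: "compose-notes", text: "The top-level version key in Compose files is obsolete." },
  ],
  1,
);
```

Only the Angular note makes it into `ragPrompt`, so the model sees current guidance instead of relying on whatever it memorized before its training cutoff.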

Prompt & Context Management

- PromptBase or Flowise: build, version, and test prompt templates so you don’t have to rewrite the same “generate Angular SSR route” prompt a dozen times.

- MemGPT or Trickle: tools that automatically summarize and index your prior chats and code outputs, letting you recall earlier “vibe” sessions with a single query.

CI/CD & Quality‑Gate Integrations

- DeepSource, Snyk, or SonarCloud bots: run scans on AI‑generated code immediately, flagging vulnerabilities or deprecated patterns before they merge.

- GitHub Actions + custom scripts: enforce linting, test coverage, and security scans on every PR—even if that PR was AI‑sourced.

Containerized Sandboxes

- Docker Compose / Dev Containers: spin up an isolated environment per feature so you can “vibe” on a micro‑service without polluting your main branch.

- Play with Docker Playground: quick one‑off testbeds where you paste AI code, see what breaks, iterate.

At their core, they’re any integrations or utilities that:

- Let you talk to your code, not type it.

- Automate context management—so your LLM doesn’t “forget” type definitions, environment settings, or module boundaries.

- Provide guardrails that catch AI slip‑ups (security, deprecation, style) early.

- Offer sandboxing so you can experiment fearlessly.

Pick the combo that fits your stack—and always layer in tests and reviews on top of the AI‑generated output.

GitHub Copilot vs Microsoft Copilot

GitHub Copilot and Microsoft Copilot share a name, and both tap into large language models, but they’re distinct products aimed at different users:

Scope & Integration

- GitHub Copilot lives inside your editor or IDE (VS Code, JetBrains, Neovim, Visual Studio, etc.) and is wired into your codebase. It’s purpose‑built to autocomplete code, translate comments into functions, suggest refactorings, even generate tests—all in the context of the files you’re editing (Plain Concepts).

- Microsoft Copilot (often called Microsoft 365 Copilot or Copilot in Windows) is embedded across Office apps (Word, Excel, PowerPoint, Outlook, Teams) and the Windows shell itself. Its focus is on drafting documents, summarizing data or meetings, automating workflows, and surfacing organizational data via Microsoft Graph (Dev4Side, TechTarget).

Primary Use Cases

- GitHub Copilot → Developers writing code. It “knows” your code’s context, types, dependencies, and can crank out boilerplate or even complex algorithms with a few keystrokes.

- Microsoft Copilot → Knowledge workers creating content and managing data. Need a pivot table analysis in Excel? A slide deck outline? An email draft? Copilot handles that.

Underlying Tech & Data Access

- Both lean on OpenAI’s GPT‑family models (Codex/GPT‑4), but Microsoft 365 Copilot layers in Microsoft Graph so it can pull in your organization’s documents, chats, and emails when generating responses (TechTarget).

- GitHub Copilot is trained on public code (including the massive GitHub corpus) and uses that to predict what you’ll type next—it doesn’t natively tap into your private Office data.

Pricing & Licensing

- GitHub Copilot: ~$10/user per month (individual), with business tiers available.

- Microsoft 365 Copilot: ~$20 per person per month, but it’s still Office‑centric.

Bottom line: They’re “cousins” under the same Copilot umbrella, but GitHub Copilot is your AI pair‑programmer in the IDE, while Microsoft Copilot is your AI assistant across Office and Windows for document/report/data tasks.

What is an LLM in Generative AI?

A Large Language Model (LLM) is a program trained on huge amounts of text to predict the next token (a piece of a word). That simple skill, predicting one token after another, lets it write emails, explain code, draft SQL, summarize docs, or chat. If you’re wondering what an LLM is in practice, think: “a very fast autocomplete that learned patterns from billions of sentences.”

How it works (plain version):

It doesn’t “look up facts” like a database. It generalizes patterns it saw during training. When it lacks context, it can guess—sometimes well, sometimes poorly.
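To make “predict the next token from patterns” tangible, here is a toy bigram model: it counts which word followed which in a tiny training text and always guesses the most frequent follower. This is a deliberately crude sketch; real LLMs use neural networks over subword tokens, and every name below is made up for illustration.

```typescript
// For each word, how often each other word followed it in the training text.
type BigramCounts = { [word: string]: { [next: string]: number } };

// "Training": count word pairs in the corpus.
function trainBigrams(corpus: string): BigramCounts {
  const words = corpus.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
  const counts: BigramCounts = Object.create(null); // no inherited keys
  for (let i = 0; i < words.length - 1; i++) {
    const current = words[i];
    const next = words[i + 1];
    counts[current] = counts[current] || Object.create(null);
    counts[current][next] = (counts[current][next] || 0) + 1;
  }
  return counts;
}

// "Inference": return the most frequent follower, or null if the word is unseen.
function predictNext(counts: BigramCounts, word: string): string | null {
  const followers = counts[word.toLowerCase()];
  if (!followers) return null;
  let best: string | null = null;
  let bestCount = 0;
  for (const candidate of Object.keys(followers)) {
    if (followers[candidate] > bestCount) {
      best = candidate;
      bestCount = followers[candidate];
    }
  }
  return best;
}

const toyModel = trainBigrams("the cat sat on the mat and the cat slept");
const guess = predictNext(toyModel, "the");  // "cat" (seen twice after "the")
const unseen = predictNext(toyModel, "dog"); // null (never seen in training)
```

The toy model admits it has nothing to say about “dog.” A real LLM rarely does that; it generalizes and produces a plausible continuation anyway, which is exactly where confident guesses come from.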

What is an LLM good for?

- Turning messy text into cleaner text (summaries, rewrites, explanations).

- Producing first drafts (emails, docs, unit tests).

- Translating between natural language and code/config (regex, SQL, API calls).

- Tutoring: explaining a concept in steps with examples.

Where it struggles (know these limits):

- Hallucinations: confident but wrong details when your prompt is vague or asks for niche facts.

- Freshness: unless connected to tools/search, it may not know latest versions or news.

- Long contexts: it can lose track in very long inputs; important details should be restated near the end.

- Exactness: strict math, formal proofs, or specs without tests can go sideways.

How to get better answers:

- Provide concrete context (versions, interfaces, error messages).

- Set constraints (no new deps, time/memory limits).

- Ask for a plan first, then code.

- Request citations or doc links for non-obvious APIs.

- Define acceptance checks (“must return `{items,total}`; handle empty results”).

TL;DR: What is an LLM?

The short answer is “a next-token predictor that’s become a general text engine.” It’s powerful, but it’s not a source of truth. Treat outputs as drafts; verify with tests, docs, and small run-time checks.

LLM Vibe Coding Tutorial

Here’s a practical, no-BS guide focused on using LLMs without getting burned—copy/paste hygiene, prompts that actually work, testing, and not getting lazy.

1) Ground rule

LLMs are confident, not always correct. Treat every answer as a draft. Your job is to verify, not believe.

2) Copy-paste hygiene (the “no-regrets” routine)

- Read first. Say out loud what each block does. If you can’t explain it, don’t paste it.

- Paste in a sandbox (temp file or throwaway branch). Never straight to main.

- Run a tiny test immediately. Don’t wire it into the whole app yet.

- Add checks: a couple asserts or `console.log()`s on inputs/outputs.

- Diff and comment your own change: why this code, risk, fallback.

- Name your commit with intent (“Add X; known limitations: …”).

TIP: Use a language with strict type checking (like TypeScript or Go) wherever possible. Even if the LLM lacks the full context and hallucinates some bad code, the compiler can catch the error early, while it’s still in development. This matters most on larger projects spanning multiple files and directories.
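Here is a small sketch of what that safety net looks like in TypeScript. The `UserRecord` type and the invented `fullName` field are assumptions for illustration; the `@ts-expect-error` directive is only there so the example still builds while marking the line the compiler would otherwise reject.

```typescript
interface UserRecord {
  id: number;
  name: string;
}

const record: UserRecord = { id: 1, name: "Ada" };

// Suppose generated code assumed a `fullName` field that was never defined.
// The type checker rejects this line; the directive below suppresses that
// error purely so this sketch compiles and can show the runtime result.
// @ts-expect-error Property 'fullName' does not exist on type 'UserRecord'.
const hallucinated: string = record.fullName;

// The field that actually exists type-checks without complaint.
const correct: string = record.name;
```

Without type checking, the bad line runs silently and `hallucinated` ends up `undefined`, a bug you would only discover later. With it, the mistake surfaces the moment you compile.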

Landmines

- Code that imports libs you don’t use (or don’t know how to use).

- Hidden side effects (globals, singletons, patching prototypes).

- “Magic” config changes (`tsconfig`, `eslint`, `babel`) snuck into the answer.

3) Prompts that actually help (fast template)

When you ask for code, include these:

- Goal: what you want.

- Context: framework + version + runtime (e.g., Node 20, Python 3.11).

- Interfaces / data shape: types or example JSON.

- Constraints: perf, readability, no new deps (if true), licensing, security/compliance.

- Error or test output (if any): paste the exact text/stack.

- Acceptance criteria: how we’ll know it works (measurable).

- Budget & hardware: time/money caps, CPU/RAM/GPU, hosting limits.

- Assumptions & non-goals: what we’re not doing.

- Output format: “minimal diff” or “one file only,” with line count cap.

Example

Here’s a simple example of what an LLM prompt should include:

- Goal: paginate a `/users` endpoint.

- Context: Express on Node 20, Postgres 15.

- Data: `User { id:number, name:string }`.

- Constraints: no ORM, keep SQL simple, no new deps, pass OWASP input rules.

- Acceptance: returns `{ items, page, pageSize, total }`; `total` from `COUNT(*)`; ≤40 lines handler + SQL shown; handles empty result and page beyond end.

- Budget & hardware: Can only invest <1 hour of dev time daily, must run on 0.5 vCPU / 512MB RAM container.

- Assumptions & non-goals: sorting by `id` only; no cursor pagination; no full-text search.

- Output format: show SQL first, then the Express handler, as a minimal diff against `routes/users.ts`.
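If you find yourself retyping that structure, a tiny helper can keep it consistent. The sketch below is one possible way to render the template fields into a prompt; the `PromptSpec` shape and `renderPrompt` name are my own assumptions, not part of any tool.

```typescript
// Fields from the prompt template; only goal and context are mandatory here.
interface PromptSpec {
  goal: string;
  context: string;
  data?: string;
  constraints?: string;
  acceptance?: string;
  budget?: string;
  nonGoals?: string;
  outputFormat?: string;
}

// Render the filled-in fields as labeled lines, skipping anything left empty.
function renderPrompt(spec: PromptSpec): string {
  const sections: Array<[string, string | undefined]> = [
    ["Goal", spec.goal],
    ["Context", spec.context],
    ["Data", spec.data],
    ["Constraints", spec.constraints],
    ["Acceptance", spec.acceptance],
    ["Budget & hardware", spec.budget],
    ["Assumptions & non-goals", spec.nonGoals],
    ["Output format", spec.outputFormat],
  ];
  return sections
    .filter((pair) => pair[1] !== undefined && pair[1] !== "")
    .map((pair) => `${pair[0]}: ${pair[1]}`)
    .join("\n");
}

const usersPrompt = renderPrompt({
  goal: "paginate a /users endpoint",
  context: "Express on Node 20, Postgres 15",
  data: "User { id:number, name:string }",
  constraints: "no ORM, keep SQL simple, no new deps",
  acceptance: "returns { items, page, pageSize, total }; handles empty result",
  outputFormat: "SQL first, then the handler, as a minimal diff",
});
```

Making `goal` and `context` required is a design choice: the type checker then nags you whenever you are about to send a prompt that forces the model to guess the basics.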

IMPORTANT: Tell the LLM your budget (time + money) and hardware limits. Ask for stack advice under those limits, not “best overall.” Require a short option trade-off table if multiple stacks are proposed.

Why this works: you reduce guessing. Less guessing = fewer hallucinations, and more correct results.

Ask the model to do this first (guardrails):

- “List unknowns and ask 3 clarifying questions before coding.”

- “Propose a 5-step plan; then wait.”

- “Cite docs for each API used (with version).”

- “Give a confidence score and 3 risks with quick checks.”

4) Ask for a plan before code

Bad: “Write it.” Better: “Give a 5-step plan first, then code. Flag risks and assumptions.” If the plan looks shaky, fix the plan. Then ask for code.

5) Make the model explain, not just dump code

Use it like a tutor:

- “Explain this error like I’m new, with a 10-line example.”

- “Show the happy path and one common failure path.”

- “List 3 mistakes people make with X and how to avoid them.”

- “Quiz me with 3 questions and give answers after I try.”

If you don’t understand, you won’t debug it later. Slow is smooth; smooth is fast.

6) Debugging with an LLM (don’t ask for a magic fix)

Give it a Minimal Repro:

- One file, hardcoded input, clear steps to run.

- Paste the exact error + stack trace.

- Ask: “Give 3 likely causes ranked, and for each, a 1-minute check I can run.”

This gives you a decision tree, not a wall of code.

7) Testing after you paste (bare minimum)

- Smoke test: run it with 2-3 typical inputs + 1 nasty edge case.

- Assertions: check the return shape and a key invariant (e.g., sorted order, length matches).

- Runtime check: log timings or one memory reading if perf matters.

- Guardrails: if you’re in JS/TS, add a simple runtime schema (even one `if` check is better than nothing).
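Here is roughly what that bare minimum can look like, using the pagination example from earlier. To stay self-contained, `paginateUsers` is an in-memory stand-in for the real SQL-backed handler, and the `check` helper replaces a test framework; both are assumptions for this sketch.

```typescript
interface UserRow { id: number; name: string; }
interface UsersPage { items: UserRow[]; page: number; pageSize: number; total: number; }

// In-memory stand-in for the SQL-backed handler: sort by id, then slice.
function paginateUsers(rows: UserRow[], page: number, pageSize: number): UsersPage {
  const safePage = Math.max(1, Math.floor(page) || 1);
  const safeSize = Math.min(100, Math.max(1, Math.floor(pageSize) || 10));
  const sorted = rows.slice().sort((a, b) => a.id - b.id);
  const start = (safePage - 1) * safeSize;
  return { items: sorted.slice(start, start + safeSize), page: safePage, pageSize: safeSize, total: rows.length };
}

// Tiny assertion helper so the sketch needs no test framework.
function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(`Smoke test failed: ${message}`);
}

// Runtime shape check: a cheap guard against "it compiled" optimism.
function isUsersPage(value: any): value is UsersPage {
  return !!value && Array.isArray(value.items) &&
    typeof value.page === "number" &&
    typeof value.pageSize === "number" &&
    typeof value.total === "number";
}

const sampleRows: UserRow[] = [{ id: 3, name: "Cy" }, { id: 1, name: "Ada" }, { id: 2, name: "Bo" }];

// Typical input: shape plus a key invariant (sorted by id).
const firstPage = paginateUsers(sampleRows, 1, 2);
check(isUsersPage(firstPage), "return shape");
check(firstPage.items.length === 2 && firstPage.items[0].id === 1, "sorted first page");
check(firstPage.total === 3, "total counts all rows");

// Nasty cases: page past the end, and no rows at all.
const beyondEnd = paginateUsers(sampleRows, 99, 2);
check(beyondEnd.items.length === 0 && beyondEnd.total === 3, "page beyond end is empty");
const noRows = paginateUsers([], 1, 10);
check(noRows.items.length === 0 && noRows.total === 0, "empty input");
```

None of this is thorough, and it isn’t meant to be. It takes a minute to write and catches the weird cases that “it compiled” never will.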

Landmines

- “It compiled” ≠ “It works.”

- Skipping the weird case (empty list, null values, huge input).

8) Hallucination red flags (spot them fast)

- APIs that don’t exist, wrong import paths, or wrong method names.

- Vague steps: “just configure X” (but no exact lines).

- Old or mixed versions (“use Angular’s HttpModule”, which isn’t a thing anymore).

- Glossing over auth, CORS, or DB transactions.

- “Should work” language without proof (no tests, no command to run).

Counter-move: “Cite docs for each API used. Include version numbers.” If it can’t, downgrade trust.
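One red flag you can check mechanically is imports of packages you never installed. The sketch below pulls module specifiers out of a code string and compares them with a declared dependency list. The regex is a rough heuristic rather than a real parser, and the package name `express-magic-paginate` is invented purely as an example of a hallucinated dependency.

```typescript
// Extract module specifiers from import statements and require()/import() calls.
function listImports(code: string): string[] {
  const found: string[] = [];
  const pattern = /(?:from\s+|require\(\s*|import\(\s*|import\s+)["']([^"']+)["']/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(code)) !== null) {
    if (found.indexOf(match[1]) === -1) found.push(match[1]);
  }
  return found;
}

// Reduce "lodash/fp" to "lodash" and "@scope/pkg/sub" to "@scope/pkg".
function packageName(specifier: string): string {
  const parts = specifier.split("/");
  return specifier.charAt(0) === "@" ? parts.slice(0, 2).join("/") : parts[0];
}

// Flag bare imports that are missing from the declared dependency list.
// Limitation: built-ins imported without the "node:" prefix are flagged too.
function findUndeclaredImports(code: string, declared: string[]): string[] {
  return listImports(code)
    .filter((spec) => spec.charAt(0) !== "." && spec.indexOf("node:") !== 0)
    .map((spec) => packageName(spec))
    .filter((pkg, i, all) => all.indexOf(pkg) === i)
    .filter((pkg) => declared.indexOf(pkg) === -1);
}

const generated = [
  'import express from "express";',
  'import { paginate } from "express-magic-paginate";', // invented package name
  'import { db } from "./db";',
].join("\n");

const undeclared = findUndeclaredImports(generated, ["express", "pg"]);
// undeclared contains only "express-magic-paginate"
```

An undeclared import isn’t proof of a hallucination, but it is a cheap prompt to go look the package up before you run an install command on the model’s say-so.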

9) Version + environment discipline

Always tell the model:

- Language + version (Node 20 / Python 3.11).

- Framework + version (Angular 18, Express 5).

- Build/runtime (browser vs server, ES modules vs CJS).

- OS if relevant.

Ask for version-specific code. Saves hours.

10) Security + privacy sanity

- Never paste secrets, tokens, or real customer data.

- Ask for code that keeps secrets in env and redacts logs.

- If it touches auth/crypto, ask for a threat list: “List 5 risks and mitigations.”
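As a reference point when you review generated code, here is a minimal sketch of “secrets in env, redacted logs.” The key pattern and function names are assumptions; the environment is passed in as a plain object (in Node you would hand it `process.env`) so the example runs anywhere.

```typescript
// Keys whose values should never reach a log line (extend for your app).
const SENSITIVE_KEY = /(password|secret|token|api[_-]?key|authorization)/i;

// Return a shallow copy of `fields` that is safe to log.
// Nested objects are not inspected in this sketch.
function redactForLog(fields: { [key: string]: unknown }): { [key: string]: unknown } {
  const safe: { [key: string]: unknown } = {};
  for (const key of Object.keys(fields)) {
    safe[key] = SENSITIVE_KEY.test(key) ? "[REDACTED]" : fields[key];
  }
  return safe;
}

// Read a secret from an injected environment map instead of hardcoding it.
// The error names the missing key but never echoes a value.
function requireSecret(env: { [key: string]: string | undefined }, key: string): string {
  const value = env[key];
  if (!value) throw new Error(`Missing required secret: ${key}`);
  return value;
}

const fakeEnv = { API_TOKEN: "not-a-real-token" };
const apiToken = requireSecret(fakeEnv, "API_TOKEN");
const logLine = JSON.stringify(redactForLog({ userId: 42, apiToken: apiToken }));
// logLine is {"userId":42,"apiToken":"[REDACTED]"}
```

If the code an LLM hands you logs request bodies or config objects without something like this in front, treat it as a finding, not a style nit.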

11) Use LLMs to learn concepts (not just code)

Prompts that work:

- “Explain X in plain words, then show the math/algorithm, then a 10-line example.”

- “Compare X vs Y. When would I choose one over the other? Table please.”

- “Show how data flows through my code. Start at the HTTP request and end at the DB write.”

You want mental models, not just snippets.

12) Review checklist for LLM output (score 1-5)

- Correctness: compiles, runs, passes your tiny tests.

- Compatibility: matches your versions and environment.

- Clarity: names, comments, no magic.

- Complexity: simplest thing that can work?

- Coverage: edge cases considered?

- Security: no obvious leaks or injections.

Anything <4 on any line → ask for a revision.
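If you want to make the rule mechanical, a few lines are enough. This sketch assumes you jot the six scores down as an object; the `ReviewScores` shape and function name are illustrative only.

```typescript
// One score (1-5) per checklist item.
interface ReviewScores {
  correctness: number;
  compatibility: number;
  clarity: number;
  complexity: number;
  coverage: number;
  security: number;
}

// Return the checklist items that scored below the threshold (default 4).
function itemsNeedingRevision(scores: ReviewScores, threshold: number = 4): string[] {
  const keys = Object.keys(scores) as Array<keyof ReviewScores>;
  return keys.filter((key) => scores[key] < threshold);
}

const needsWork = itemsNeedingRevision({
  correctness: 5,
  compatibility: 4,
  clarity: 3,
  complexity: 4,
  coverage: 2,
  security: 5,
});
// needsWork is ["clarity", "coverage"]
```

The returned names double as the revision request: “Improve clarity and coverage; keep everything else as is.”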

13) Don’t get complacent (anti-drift habits)

- Two-pass rule: first pass for “does it run,” second pass for “do I understand it.”

- Diff-only requests: “Give me a patch against this file.” (Forces minimal change.)

- Weekly no-LLM hour: rebuild a tiny feature from scratch using docs only.

- Read source/docs first for core libs you rely on.

- Keep a “gotcha” log: every time the model fooled you, write it down and check it next time.

14) Useful prompt mini-library

A) Feature (minimal change)

Here’s my file and versions: … Goal: … Constraints: no new deps, keep function signatures. Acceptance: … Output: unified diff only; ≤30 lines. Explain 3 risks after the diff.

B) Debug

Minimal repro: … Error: (paste exact text). Give 3 ranked causes with a 1-minute check for each. No code yet.

C) Refactor (surgical)

Keep behavior identical. Reduce branches and duplication. Add 1 small test for the trickiest branch. Show before/after complexity (lines, cyclomatic approx).

D) Explain

Teach me X with a real-world analogy, then a precise definition, then a 10-line example, then 3 quiz questions.

E) Tests from code

Read this function. Generate 6 tests: 3 normal, 2 edge, 1 error. Include expected outputs and reasons.

15) Ten-minute “safe usage” checklist

- State versions + environment.

- Ask for a plan first.

- Demand minimal diff or one small file.

- Paste into a sandbox/branch.

- Run a smoke test + one edge case (use logging/print statements to debug!).

- Add 1-2 asserts/guards.

- Scan for fake APIs/imports.

- Ask for doc links for anything non-obvious.

- Write a one-line risk note in the commit.

- If it feels too clever, ask for a dumber version.

Conclusion

“Vibe coding” isn’t just another passing tech meme—it’s a real shift in how prototypes, apps, and even production code get built in the era of LLMs. Used wisely, it can unlock massive productivity and lower the barriers for new developers. But, as with any shortcut, it comes with hidden costs: skill decay, fragile code, and a dangerous reliance on out-of-date AI knowledge. The best devs will treat vibe coding tools as accelerators, not crutches—pairing them with rigorous reviews, robust testing, and a sharp understanding of their own stack. Embrace the vibe, but don’t outsource your judgment. Your future codebase will thank you.